A deep dive into artificial intelligence, deepfakes, and AI potential

Artificial intelligence is all over the news. Not long ago, Nvidia, a leader in artificial intelligence, surpassed Amazon in market value, a sign of surging demand. In many cases, AI has positive effects, such as helping to automate tasks and speeding up research. However, one concerning outcome of AI and machine learning is the unregulated development and dissemination of “deepfake” content.

What deepfakes are and why they are problematic

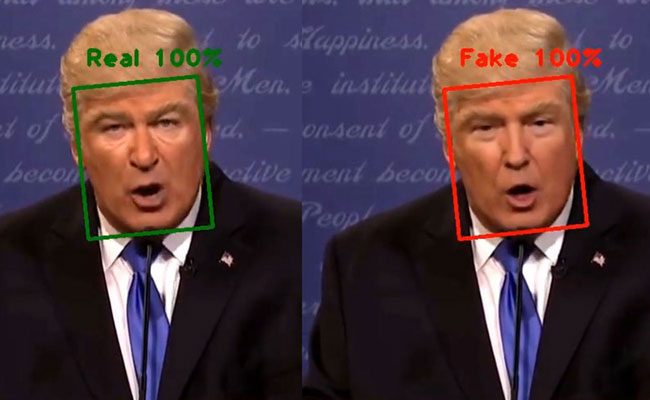

Deepfakes are created by individuals or organizations using AI algorithms to manipulate data such as images, videos, and audio recordings of a person or people. In some cases, AI is used to create helpful resources like professional headshots, but the darker side of AI revolves around malice and misinformation, where altered media is used to intimidate or harass the subject or spread lies.

X, formerly known as Twitter, briefly blocked searches for Taylor Swift after deepfake pornographic images of the singer started circulating on the internet. With an election looming, deepfakes of politicians are popping up all over the place, prompting lawmakers to consider regulating the phenomenon. In one case, a deepfake voice recording of Joe Biden was used to discourage Democrats from participating in the primary election, which could have swayed voting results. With outcomes like that, it’s easy to question whether AI is doing more harm than good.

Dr. Federica Fornaciari, an associate professor in the Strategic Communications programs at the National University, shared her thoughts on this type of technology: “ … It is pretty astonishing to witness how realistic these deepfakes can be, especially in a technology [sic] evolves at an incredible speed but obviously also raises critical concerns … They’ve become a hotbed for ethical and societal discussion. You know, from tarnishing the reputation of public figures to spreading misinformation, they can cause pretty significant challenges for media integrity and for public trust ultimately.” The creation of deepfakes is concerning not only because of how quickly the technology is developing but also because it remains largely unregulated.

Dr. Siwei Lyu, a SUNY Empire Innovation Professor of computer science and engineering at the University at Buffalo, told Yellow Scene Magazine that one of his greatest concerns is that “DeepFakes can escalate the scale and danger of online fraud and disinformation when used for deception to threaten our cognitive security. Similar to threats to our physical security or cybersecurity, which aims to break into our physical infrastructure or cyber systems, threats to cognitive security target our perceptual system and decision-making process. In particular, by creating illusions of an individual’s presence and activities that did not occur in reality, DeepFakes can influence our opinions or decisions.” Although deepfakes haven’t yet induced identifiable long-term change, they often create short-term chaos that can lead to disruptions and an erosion of trust in digital media, Dr. Lyu said.

Many of the problems deepfakes present are likely to grow. “We will likely reach a tipping point where the production of fake content outpaces our ability to detect it. This could have several implications. With this advancement, we anticipate an increase in the volume and quality of fake content on social media and the internet. The speed of generation and distribution of this content may accelerate, potentially flooding our information ecosystem. Furthermore, we might see more comprehensive disinformation campaigns that combine multimedia and multi-site illusions. Deceptive narratives could be reinforced across texts, images, videos, and audio for more compelling storytelling. Additionally, we can expect more refined and subtle forms of manipulation targeting individuals, businesses, and government agencies,” said Dr. Lyu. Even if deepfake technology can be regulated, regulation will likely advance more slowly than the technology itself.

As the volume of deepfake content grows, its production speeds up, and disinformation spreads, the technology could disrupt social platforms in harmful ways.

Why deepfake technology is useful

The development of a deepfake relies on deep learning, where an algorithm receives numerous examples, which it then uses to create similar images or footage. The algorithm works against an opposing algorithm to improve the final rendering. In some cases, the results can be concerning or damaging. However, the same technology that is used to generate deepfakes can also accomplish tasks like animating historical photographs, repairing old video, or creating footage for educational purposes.

In one scenario, the Malaria Must Die campaign used deepfake technology to create a video featuring David Beckham speaking in nine different languages, encouraging viewers to petition to end malaria. This application demonstrates the potential to leverage deepfake technology for positive purposes.

While the rise of deepfake content has raised an uproar of ethical and social concerns, the technology behind it is revolutionary. According to Dr. Lyu, “The generative AI technology behind the production of DeepFakes has many beneficial uses. Examples include immersive communication … and faster video streaming … reducing cost and effort in the movie and advertisement industry, and rehabilitation efforts for stroke patients and individuals with hearing impairment.”

The legality surrounding deepfake creation and distribution

The creation and use of most deepfakes is not currently illegal across the United States, but state laws vary. The state of Minnesota recently made it illegal to create and distribute deepfakes in cases involving political content or pornography. Additionally, Georgia, Hawaii, Virginia, Washington, and Wyoming have laws in place that regulate these fake videos and images.

Lawmakers are also beginning to evaluate whether there should be broader restrictions on these images and videos, particularly when it comes to nonconsensual porn. Most recently, a bipartisan bill was introduced that would allow victims to sue those who create deepfakes knowing that the subject did not consent to their making. The bill came shortly after deepfakes of Taylor Swift were distributed across the internet. At this time, ten states already have laws in place that create this type of protection. The new bill would create federal protections.

Who suffers the most from deepfake usage?

One of the greatest concerns surrounding deepfake usage is how, and whom, it may affect. While no one is immune to the use of deepfakes, this type of technology has the potential to perpetuate stereotypes and incriminate marginalized communities.

Dr. Fornaciari stated, “Marginalized communities are particularly at risk. You know, deepfakes can exacerbate existing inequalities, they can target those who are already disproportionately disadvantaged. Manipulated content can, you know, amplify hate speech. It can fuel discrimination, it can perpetuate false narratives and further make it even more problematic for social progress to happen. Right, so we’ve seen the consequences of deepfakes in so many domains. In humanitarian crises or emergency situations, deepfakes can hinder relief efforts. They can exacerbate confusion. Humanitarian aid workers and those who are affected by the crisis could encounter challenges in delivering and receiving accurate information. It is scary, you know.”

The side effects of using deepfakes to create false narratives could be catastrophic for some parties. “These are all troubling concerns, you know, deepfakes can so easily be exploited to target and harm different communities, different individuals. Again, particularly public figures but also vulnerable communities. So there are very serious consequences for the individual and for society. So media authenticity is at stake. Consumers who rely on accurate information, and credible sources to make their informed decisions, can encounter problems there. Of course, reputation and identity can be at stake. You know, when deepfakes portray someone engaging in actions or they never did, these manipulations can have far-reaching personal and professional consequences,” said Dr. Fornaciari.

Dr. Lyu agreed that deepfakes can be dangerous and that no one is safe from their impacts. “Deepfake attacks can target anyone. The particularly vulnerable demographics include older adults, to whom DeepFake audios can often be exploited for financial scams targeting them (e.g., grandparent scams). Women and girls are also victims of defamation attacks using DeepFakes, e.g., revenge pornography.” In most American states, the legal parameters for deepfake use have not yet been established, which makes it difficult to protect against exploitation.

The era of fake news and compromised media trust

The spread of “fake news” isn’t anything new. Some estimates suggest that the spread of disinformation has been happening for centuries, although the term for it wasn’t established until the 1890s. As technology evolves, however, fake news seems to be getting more and more convincing, and deepfakes are a prime example of the harm that it can cause. As this type of tool becomes more widespread, so does general mistrust of the media.

Dr. Fornaciari reflected, “One primary concern for sure revolves around the potential for misinformation and deception. As we have said, deepfakes can pretty convincingly fabricate content. They blur the line between reality and unreality. So media consumers need to exercise caution in trusting sources. They need to develop critical thinking skills. They need to verify the authenticity of the content that they encounter and they share because there is another component here to remember.” Now more than ever, being able to verify that a media source is trustworthy and likely to convey accurate information is vital. While regulation is part of the equation when it comes to deepfakes, so is personal responsibility. Learning how to detect deepfakes can slow or stop the progress of disinformation.

Distinguishing deepfakes from real images and videos

Artificial intelligence has a shocking ability to fabricate content, which can make it difficult to determine what virtual information is trustworthy. As AI advances, the images and videos that it creates will likely be harder to evaluate for misinformation.

Dr. Fornaciari said, “… Distinguishing authentic content, genuine content, from manipulated content becomes increasingly challenging, and this goes without saying, it can lead to skepticism or people question whether you know every piece of media they encounter is authentic or fabricated. So society may witness a pretty concerning decline in the credibility of video evidence, which can have consequences, you know, in the legal context, or they can create obstacles in determining the truth, hindering the pursuit of justice.” Videos have historically been used as evidence in court, but the rise of deepfakes could undermine this type of evidence in the future.

Fortunately, as deepfakes become more common, some industry professionals are researching ways to monitor them. Dr. Lyu reflected, “We developed DeepFake detection methods for two primary purposes: triage and evidence. A DeepFake detector used for triage screens out a smaller subset of likely DeepFakes from a large number of images, audio, and videos for closer scrutiny. For triage detectors, the primary metric is detection accuracy, minimal human intervention, and run-time efficiency. As such, they are often implemented with data-driven, AI-based methods, directly employing machine learning models trained on real media and DeepFakes to classify them.” Being able to detect and evaluate deepfakes is a vital part of managing them.
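
For readers curious about what the triage step Dr. Lyu describes might look like in practice, here is a minimal Python sketch. It is an illustration only, not his team’s method: the `MediaItem` type, the `triage` function, the 0.8 threshold, and the scores themselves are all hypothetical stand-ins for the output of a trained classifier. The idea is simply to pull the most suspicious items out of a large pile so that a person can look at them more closely.

```python
from typing import NamedTuple


class MediaItem(NamedTuple):
    name: str
    fake_score: float  # hypothetical detector output in [0, 1]; higher = more likely fake


def triage(items, threshold=0.8):
    """Return the items scoring at or above the threshold, most suspicious first."""
    flagged = [item for item in items if item.fake_score >= threshold]
    return sorted(flagged, key=lambda item: item.fake_score, reverse=True)


# Made-up scores standing in for what a trained classifier might produce.
queue = [
    MediaItem("clip_a.mp4", 0.12),
    MediaItem("photo_b.jpg", 0.91),
    MediaItem("voice_c.wav", 0.85),
    MediaItem("clip_d.mp4", 0.40),
]

# Only the flagged subset moves on to closer human scrutiny.
for item in triage(queue):
    print(item.name, item.fake_score)
```

In this sketch, lowering the threshold sends more items to human reviewers, while raising it saves their time at the risk of missing fakes, which mirrors the trade-off between accuracy and minimal human intervention mentioned in the quote.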

As artificial intelligence progresses and continues to learn, it’s likely that deepfakes will become more difficult to detect. However, there are some clues that currently allow individuals to recognize them. According to MIT, individuals who scour the internet might look for clues like facial abnormalities, especially in the cheeks, forehead, skin, and eyes. One might also check glasses for unnatural glare and take a close look at facial hair and moles. In videos, blinking and lip movements can also provide clues as to whether or not the footage is a deepfake.

Future management of deepfakes

When it comes to the future of deepfake management, the path isn’t entirely clear. However, most experts agree that regulators need to come up with some type of management plan. “That’s something that we all need to work on, like policymakers, the legal systems, the individuals, the, you know, educators everyone should be involved. The technology companies, every. Everyone needs to work together to make sure that deepfakes are you know, that this technology is used in ethical ways, that this technology fulfills a need rather than create a problem,” said Dr. Fornaciari.

However, it isn’t entirely surprising that the technology is advancing by leaps and bounds, faster than it can be regulated. “Technology is always evolving faster than the legal system and the regulation, so it can be a little difficult for the legal system to keep up. But governments could explore policies that require explicit disclosure of deepfake content, for instance, and penalties for those who create and disseminate malicious deepfakes with harmful intent. But again, it’s a very fine line. It’s complicated to be able to create regulations that don’t hinder the creation while protecting the possible harm,” she added. While it’s clear that some amount of regulation could be beneficial in the management of deepfakes, too much regulation could stunt technological advancements.

The malicious intent that often comes with the development of deepfakes is the issue that most needs to be targeted. “Again, you know, fighting the spread of malicious deepfake content to make sure that the integrity of the media is preserved is a critical challenge, and it requires a multifaceted approach, for sure,” said Dr. Fornaciari.

Dr. Lyu’s view of deepfake management involves some level of restriction as well: “… The current policies and regulations need to be more precise in delineating innocent and malicious use of synthetic media as the generative AI technology and synthetic media are dual-used, unless in extreme cases of misuse (e.g., generating targeted pornography videos to defame an individual), banning or restricting the technology may lead to unwanted side effects.”

Dr. Fornaciari suggests ways forward: “… Raising awareness, promoting media literacy, is crucial. You know, as media consumers, we must be equipped with the knowledge and with the tools to identify potential deepfakes. You know, by fostering critical thinking skills, we can better discern what’s authentic and what’s manipulated. Collaboration between technology companies, researchers, policymakers, everyone involved is also crucial.”